This page provides step-by-step examples for using Core and Enterprise changefeeds. You can create Enterprise changefeeds on CockroachDB Dedicated, on CockroachDB Serverless (beta) clusters with an Enterprise license, and on CockroachDB Self-Hosted clusters with an Enterprise license. Core changefeeds are available in all products.

For a summary of Core and Enterprise changefeed features, see What is Change Data Capture?

Enterprise changefeeds can connect to the following sinks:

- Kafka

- Google Cloud Pub/Sub

- Cloud Storage (Amazon S3, Google Cloud Storage, Azure Storage)

- Webhook

See the Changefeed Sinks page for more detail on forming sink URIs and specifics on configuration.


Create a changefeed connected to Kafka

CREATE CHANGEFEED is an Enterprise-only feature. For the Core version, see the CHANGEFEED FOR example.

In this example, you'll set up a changefeed for a single-node cluster that is connected to a Kafka sink. The changefeed will watch two tables.

If you do not already have one, request a trial Enterprise license.

Use the `cockroach start-single-node` command to start a single-node cluster:

    $ cockroach start-single-node --insecure --listen-addr=localhost --background

Download and extract the Confluent Open Source platform (which includes Kafka).

Move into the extracted `confluent-<version>` directory and start Confluent:

    $ ./bin/confluent local services start

Only `zookeeper` and `kafka` are needed. To troubleshoot Confluent, see their docs and the Quick Start Guide.

Create two Kafka topics:

    $ ./bin/kafka-topics \
    --create \
    --zookeeper localhost:2181 \
    --replication-factor 1 \
    --partitions 1 \
    --topic office_dogs

    $ ./bin/kafka-topics \
    --create \
    --zookeeper localhost:2181 \
    --replication-factor 1 \
    --partitions 1 \
    --topic employees

Note: You are expected to create any Kafka topics with the necessary replication factor and number of partitions. Topics can be created manually, or Kafka brokers can be configured to create topics automatically with a default partition count and replication factor.

As the `root` user, open the built-in SQL client:

    $ cockroach sql --insecure

Set your organization name and the Enterprise license key that you received via email:

    > SET CLUSTER SETTING cluster.organization = '<organization name>';
    > SET CLUSTER SETTING enterprise.license = '<secret>';

Enable the `kv.rangefeed.enabled` cluster setting:

    > SET CLUSTER SETTING kv.rangefeed.enabled = true;

Create a database called `cdc_demo`:

    > CREATE DATABASE cdc_demo;

Set the database as the default:

    > SET DATABASE = cdc_demo;

Create a table and add data:

    > CREATE TABLE office_dogs (
        id INT PRIMARY KEY,
        name STRING);
    > INSERT INTO office_dogs VALUES (1, 'Petee'), (2, 'Carl');
    > UPDATE office_dogs SET name = 'Petee H' WHERE id = 1;

Create another table and add data:

    > CREATE TABLE employees (
        dog_id INT REFERENCES office_dogs (id),
        employee_name STRING);
    > INSERT INTO employees VALUES (1, 'Lauren'), (2, 'Spencer');

Start the changefeed:

    > CREATE CHANGEFEED FOR TABLE office_dogs, employees INTO 'kafka://localhost:9092';

            job_id
    +--------------------+
      360645287206223873
    (1 row)

This will start up the changefeed in the background and return the `job_id`. The changefeed writes to Kafka.

In a new terminal, move into the extracted `confluent-<version>` directory and start watching the Kafka topics:

    $ ./bin/kafka-console-consumer \
    --bootstrap-server=localhost:9092 \
    --from-beginning \
    --whitelist 'office_dogs|employees'

    {"after": {"id": 1, "name": "Petee H"}}
    {"after": {"id": 2, "name": "Carl"}}
    {"after": {"dog_id": 1, "employee_name": "Lauren", "rowid": 528514320239329281}}
    {"after": {"dog_id": 2, "employee_name": "Spencer", "rowid": 528514320239362049}}

The initial scan displays the state of the tables as of when the changefeed started (therefore, the initial value of `"Petee"` is omitted).

Note: This example only prints the value. To print both the key and value of each message in the changefeed (e.g., to observe what happens with `DELETE`s), use the `--property print.key=true` flag.

Back in the SQL client, insert more data:

    > INSERT INTO office_dogs VALUES (3, 'Ernie');

Back in the terminal where you're watching the Kafka topics, the following output has appeared:

    {"after": {"id": 3, "name": "Ernie"}}

When you are done, exit the SQL shell (`\q`).

To stop `cockroach`, run:

    $ cockroach quit --insecure

To stop Kafka, move into the extracted `confluent-<version>` directory and stop Confluent:

    $ ./bin/confluent local services stop
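The value-only messages printed by the console consumer arrive as one JSON object per line. As a rough illustration of how a downstream consumer might fold them into the current state of a table, here is a minimal Python sketch. The function name `latest_rows` and the choice to key rows by a column taken from `after` are assumptions made for this illustration; they are not part of CockroachDB or Kafka.

```python
import json


def latest_rows(lines, key_column):
    """Fold value-only changefeed messages into {key: row}, keeping the last row seen."""
    rows = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        after = json.loads(line).get("after")
        if after is None:
            # A message without row data (for example, a delete) carries no key
            # column in its value, so this value-only sketch skips it.
            continue
        rows[after[key_column]] = after
    return rows


# Sample lines copied from the consumer output in this example.
sample = [
    '{"after": {"id": 1, "name": "Petee H"}}',
    '{"after": {"id": 2, "name": "Carl"}}',
    '{"after": {"id": 3, "name": "Ernie"}}',
]
dogs = latest_rows(sample, "id")
print(sorted(dogs))
```

Folding by key means that processing the same message twice leaves the result unchanged. A production consumer would likely also print message keys (so that deletes can be applied) and compare timestamps before overwriting a row.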

Create a changefeed connected to Kafka using Avro

CREATE CHANGEFEED is an Enterprise-only feature. For the Core version, see the CHANGEFEED FOR example.

In this example, you'll set up a changefeed for a single-node cluster that is connected to a Kafka sink and emits Avro records. The changefeed will watch two tables.

If you do not already have one, request a trial Enterprise license.

Use the `cockroach start-single-node` command to start a single-node cluster:

    $ cockroach start-single-node --insecure --listen-addr=localhost --background

Download and extract the Confluent Open Source platform (which includes Kafka).

Move into the extracted `confluent-<version>` directory and start Confluent:

    $ ./bin/confluent local services start

Only `zookeeper`, `kafka`, and `schema-registry` are needed. To troubleshoot Confluent, see their docs and the Quick Start Guide.

Create two Kafka topics:

    $ ./bin/kafka-topics \
    --create \
    --zookeeper localhost:2181 \
    --replication-factor 1 \
    --partitions 1 \
    --topic office_dogs

    $ ./bin/kafka-topics \
    --create \
    --zookeeper localhost:2181 \
    --replication-factor 1 \
    --partitions 1 \
    --topic employees

Note: You are expected to create any Kafka topics with the necessary replication factor and number of partitions. Topics can be created manually, or Kafka brokers can be configured to create topics automatically with a default partition count and replication factor.

As the `root` user, open the built-in SQL client:

    $ cockroach sql --insecure

Set your organization name and the Enterprise license key that you received via email:

    > SET CLUSTER SETTING cluster.organization = '<organization name>';
    > SET CLUSTER SETTING enterprise.license = '<secret>';

Enable the `kv.rangefeed.enabled` cluster setting:

    > SET CLUSTER SETTING kv.rangefeed.enabled = true;

Create a database called `cdc_demo`:

    > CREATE DATABASE cdc_demo;

Set the database as the default:

    > SET DATABASE = cdc_demo;

Create a table and add data:

    > CREATE TABLE office_dogs (
        id INT PRIMARY KEY,
        name STRING);
    > INSERT INTO office_dogs VALUES (1, 'Petee'), (2, 'Carl');
    > UPDATE office_dogs SET name = 'Petee H' WHERE id = 1;

Create another table and add data:

    > CREATE TABLE employees (
        dog_id INT REFERENCES office_dogs (id),
        employee_name STRING);
    > INSERT INTO employees VALUES (1, 'Lauren'), (2, 'Spencer');

Start the changefeed:

    > CREATE CHANGEFEED FOR TABLE office_dogs, employees INTO 'kafka://localhost:9092' WITH format = avro, confluent_schema_registry = 'http://localhost:8081';

            job_id
    +--------------------+
      360645287206223873
    (1 row)

This will start up the changefeed in the background and return the `job_id`. The changefeed writes to Kafka.

In a new terminal, move into the extracted `confluent-<version>` directory and start watching the Kafka topics:

    $ ./bin/kafka-avro-console-consumer \
    --bootstrap-server=localhost:9092 \
    --from-beginning \
    --whitelist 'office_dogs|employees'

    {"after":{"office_dogs":{"id":{"long":1},"name":{"string":"Petee H"}}}}
    {"after":{"office_dogs":{"id":{"long":2},"name":{"string":"Carl"}}}}
    {"after":{"employees":{"dog_id":{"long":1},"employee_name":{"string":"Lauren"},"rowid":{"long":528537452042682369}}}}
    {"after":{"employees":{"dog_id":{"long":2},"employee_name":{"string":"Spencer"},"rowid":{"long":528537452042747905}}}}

The initial scan displays the state of the tables as of when the changefeed started (therefore, the initial value of `"Petee"` is omitted).

Note: This example only prints the value. To print both the key and value of each message in the changefeed (e.g., to observe what happens with `DELETE`s), use the `--property print.key=true` flag.

Back in the SQL client, insert more data:

    > INSERT INTO office_dogs VALUES (3, 'Ernie');

Back in the terminal where you're watching the Kafka topics, the following output has appeared:

    {"after":{"office_dogs":{"id":{"long":3},"name":{"string":"Ernie"}}}}

When you are done, exit the SQL shell (`\q`).

To stop `cockroach`, run:

    $ cockroach quit --insecure

To stop Kafka, move into the extracted `confluent-<version>` directory and stop Confluent:

    $ ./bin/confluent local services stop
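In the Avro consumer output above, each column value is wrapped in a single-entry object naming its type (for example, `{"long":1}`), and the row itself is wrapped in an object named after the table. The following Python sketch shows one way a consumer of that printed JSON might flatten it into plain rows. The helper names `unwrap` and `row_from_message` and the set of recognized type names are assumptions for illustration, and the approach is a heuristic: a single-column record whose column is literally named `long` or `string` would be misread.

```python
import json

# Type names this sketch treats as Avro union wrappers (an assumption, not exhaustive).
AVRO_BRANCHES = {"long", "int", "string", "boolean", "float", "double", "bytes"}


def unwrap(value):
    """Recursively strip single-entry {"<type>": v} wrappers from decoded JSON."""
    if isinstance(value, dict):
        if len(value) == 1:
            ((branch, inner),) = value.items()
            if branch in AVRO_BRANCHES:
                return unwrap(inner)
        return {k: unwrap(v) for k, v in value.items()}
    if isinstance(value, list):
        return [unwrap(v) for v in value]
    return value


def row_from_message(line):
    """Return (table, row) for one consumer line, or None if it has no row data."""
    after = json.loads(line).get("after")
    if after is None:
        return None
    ((table, record),) = after.items()
    return table, unwrap(record)


line = '{"after":{"office_dogs":{"id":{"long":3},"name":{"string":"Ernie"}}}}'
print(row_from_message(line))
```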

Create a changefeed connected to a Google Cloud Pub/Sub sink

The Google Cloud Pub/Sub sink is currently in beta.

New in v22.1: In this example, you'll set up a changefeed for a single-node cluster that is connected to a Google Cloud Pub/Sub sink. The changefeed will watch a table and send messages to the sink.

You'll need access to a Google Cloud Project to set up a Pub/Sub sink. In this example, the Google Cloud CLI (gcloud) is used, but you can also complete each of these steps within your Google Cloud Console.

If you do not already have one, request a trial Enterprise license.

Use the `cockroach start-single-node` command to start a single-node cluster:

    cockroach start-single-node --insecure --listen-addr=localhost --background

In this example, you'll run CockroachDB's Movr application workload to set up some data for your changefeed.

First create the schema for the workload:

    cockroach workload init movr "postgresql://root@127.0.0.1:26257?sslmode=disable"

Then run the workload:

    cockroach workload run movr --duration=1m "postgresql://root@127.0.0.1:26257?sslmode=disable"

As the `root` user, open the built-in SQL client:

    $ cockroach sql --insecure

Set your organization name and the Enterprise license key that you received via email:

    > SET CLUSTER SETTING cluster.organization = '<organization name>';
    > SET CLUSTER SETTING enterprise.license = '<secret>';

Enable the `kv.rangefeed.enabled` cluster setting:

    > SET CLUSTER SETTING kv.rangefeed.enabled = true;

Next, you'll prepare your Pub/Sub sink.

In a new terminal window, create a Service Account attached to your Google Project:

    gcloud iam service-accounts create cdc-demo --project cockroach-project

In this example, `cdc-demo` is the name of the Service Account, and `cockroach-project` is the name of the Google Project.

To ensure that your Service Account has the correct permissions to publish to the sink, use the following command to give the Service Account the predefined Pub/Sub Editor role:

    gcloud projects add-iam-policy-binding cockroach-project --member='serviceAccount:cdc-demo@cockroach-project.iam.gserviceaccount.com' --role='roles/pubsub.editor'

Create the Pub/Sub topic to which your changefeed will emit messages:

    gcloud pubsub topics create movr-users --project cockroach-project

Run the following command to create a subscription within the `movr-users` topic:

    gcloud pubsub subscriptions create movr-users-sub --topic=movr-users --topic-project=cockroach-project

With the topic and subscription set up, you can now download your Service Account's key. Use the following command to specify where to download the JSON key file (`key.json`):

    gcloud iam service-accounts keys create key.json --iam-account=cdc-demo@cockroach-project.iam.gserviceaccount.com

Next, base64-encode your credentials key:

    cat key.json | base64

Copy the output so that you can add it to your `CREATE CHANGEFEED` statement in the next step. The key must be base64-encoded before you pass it in the URI.

Back in the SQL shell, create a changefeed that will emit messages to your Pub/Sub topic. Ensure that you pass the base64-encoded credentials for your Service Account and add your topic's region. Because the SQL shell was opened without selecting a database, the table is qualified with the `movr` database name:

    CREATE CHANGEFEED FOR TABLE movr.users INTO 'gcpubsub://cockroach-project?region=us-east1&topic_name=movr-users&AUTH=specified&CREDENTIALS={base64-encoded key}';

The output confirms the topic to which the changefeed will emit messages:

    job_id
    ----------------------
    756641304964792321
    (1 row)
    NOTICE: changefeed will emit to topic movr-users

To view all the messages delivered to your topic, you can use the Cloud Console. You'll see the messages emitted to the `movr-users-sub` subscription.

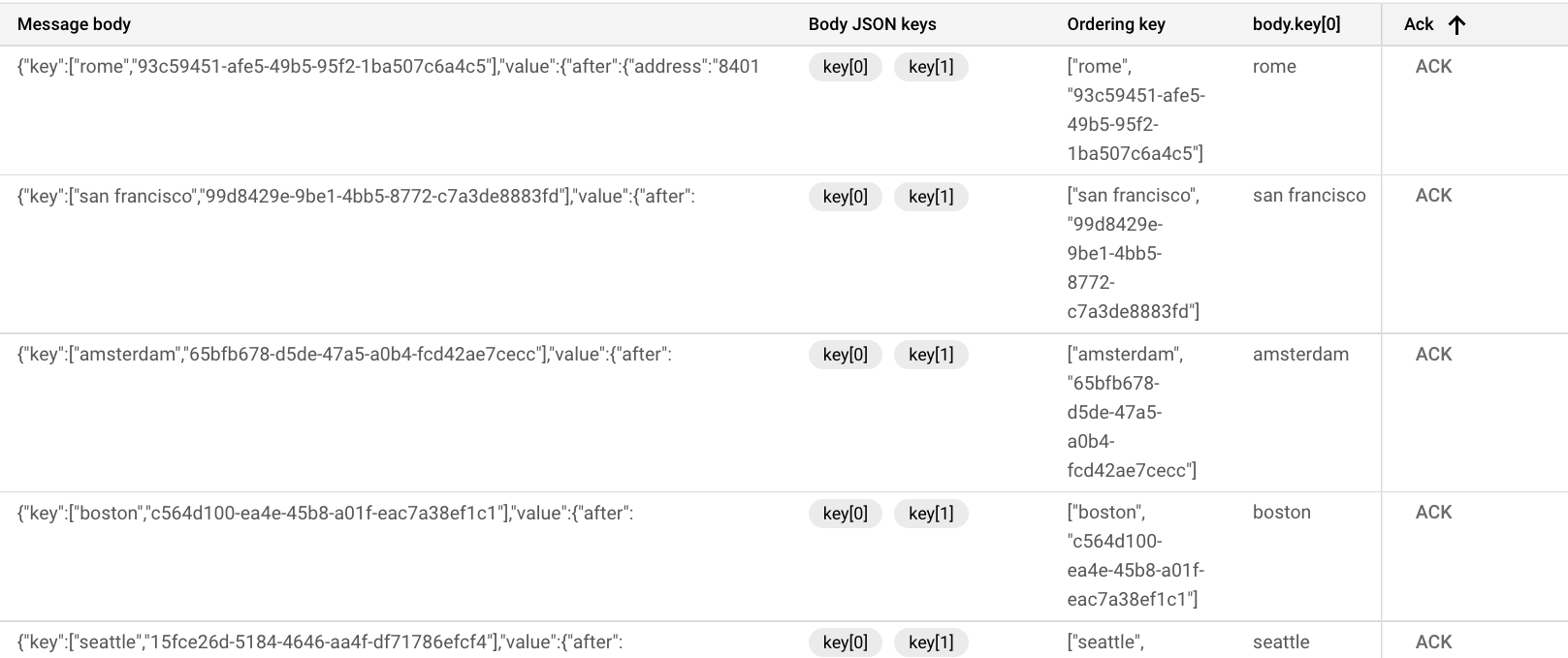

To view published messages from your terminal, run the following command:

    gcloud pubsub subscriptions pull movr-users-sub --auto-ack --limit=10

This command will only pull these messages once per subscription. For example, if you ran this command again you would receive 10 different messages in your output. To receive more than one message at a time, pass the `--limit` flag. For more details, see the gcloud pubsub subscriptions pull documentation.

    │ DATA │ MESSAGE_ID │ ORDERING_KEY │ ATTRIBUTES │ DELIVERY_ATTEMPT │
    │ {"key":["boston","40ef7cfa-5e16-4bd3-9e14-2f23407a66df"],"value":{"after":{"address":"14980 Gentry Plains Apt. 64","city":"boston","credit_card":"2466765790","id":"40ef7cfa-5e16-4bd3-9e14-2f23407a66df","name":"Vickie Fitzpatrick"}},"topic":"movr-users"} │ 4466153049158588 │ ["boston", "40ef7cfa-5e16-4bd3-9e14-2f23407a66df"] │ │ │
    │ {"key":["los angeles","947ae147-ae14-4800-8000-00000000001d"],"value":{"after":{"address":"35627 Chelsey Tunnel Suite 94","city":"los angeles","credit_card":"2099932769","id":"947ae147-ae14-4800-8000-00000000001d","name":"Kenneth Barnes"}},"topic":"movr-users"} │ 4466144577818136 │ ["los angeles", "947ae147-ae14-4800-8000-00000000001d"] │ │ │
    │ {"key":["amsterdam","c28f5c28-f5c2-4000-8000-000000000026"],"value":{"after":{"address":"14729 Karen Radial","city":"amsterdam","credit_card":"5844236997","id":"c28f5c28-f5c2-4000-8000-000000000026","name":"Maria Weber"}},"topic":"movr-users"} │ 4466151194002912 │ ["amsterdam", "c28f5c28-f5c2-4000-8000-000000000026"] │ │ │
    │ {"key":["new york","6c8ab772-584a-439d-b7b4-fda37767c74c"],"value":{"after":{"address":"34196 Roger Row Suite 6","city":"new york","credit_card":"3117945420","id":"6c8ab772-584a-439d-b7b4-fda37767c74c","name":"James Lang"}},"topic":"movr-users"} │ 4466147099992681 │ ["new york", "6c8ab772-584a-439d-b7b4-fda37767c74c"] │ │ │
    │ {"key":["boston","c56dab0a-63e7-4fbb-a9af-54362c481c41"],"value":{"after":{"address":"83781 Ross Overpass","city":"boston","credit_card":"7044597874","id":"c56dab0a-63e7-4fbb-a9af-54362c481c41","name":"Mark Butler"}},"topic":"movr-users"} │ 4466150752442731 │ ["boston", "c56dab0a-63e7-4fbb-a9af-54362c481c41"] │ │ │
    │ {"key":["amsterdam","f27e09d5-d7cd-4f88-8b65-abb910036f45"],"value":{"after":{"address":"77153 Donald Road Apt. 62","city":"amsterdam","credit_card":"7531160744","id":"f27e09d5-d7cd-4f88-8b65-abb910036f45","name":"Lisa Sandoval"}},"topic":"movr-users"} │ 4466147182359256 │ ["amsterdam", "f27e09d5-d7cd-4f88-8b65-abb910036f45"] │ │ │
    │ {"key":["new york","46d200c0-6924-4cc7-b3c9-3398997acb84"],"value":{"after":{"address":"92843 Carlos Grove","city":"new york","credit_card":"8822366402","id":"46d200c0-6924-4cc7-b3c9-3398997acb84","name":"Mackenzie Malone"}},"topic":"movr-users"} │ 4466142864542016 │ ["new york", "46d200c0-6924-4cc7-b3c9-3398997acb84"] │ │ │
    │ {"key":["boston","52ecbb26-0eab-4e0b-a160-90caa6a7d350"],"value":{"after":{"address":"95044 Eric Corner Suite 33","city":"boston","credit_card":"3982363300","id":"52ecbb26-0eab-4e0b-a160-90caa6a7d350","name":"Brett Porter"}},"topic":"movr-users"} │ 4466152539161631 │ ["boston", "52ecbb26-0eab-4e0b-a160-90caa6a7d350"] │ │ │
    │ {"key":["amsterdam","ae147ae1-47ae-4800-8000-000000000022"],"value":{"after":{"address":"88194 Angela Gardens Suite 94","city":"amsterdam","credit_card":"4443538758","id":"ae147ae1-47ae-4800-8000-000000000022","name":"Tyler Dalton"}},"topic":"movr-users"} │ 4466151398997150 │ ["amsterdam", "ae147ae1-47ae-4800-8000-000000000022"] │ │ │
    │ {"key":["paris","dc28f5c2-8f5c-4800-8000-00000000002b"],"value":{"after":{"address":"2058 Rodriguez Stream","city":"paris","credit_card":"9584502537","id":"dc28f5c2-8f5c-4800-8000-00000000002b","name":"Tony Ortiz"}},"topic":"movr-users"} │ 4466146372222914 │ ["paris", "dc28f5c2-8f5c-4800-8000-00000000002b"] │ │ │
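Returning to the credentials step earlier in this example: if you assemble the sink URI in a script rather than by hand, the base64 encoding and URI construction can be sketched as follows. This is a minimal Python illustration, not an official helper. The function name `pubsub_sink_uri` is hypothetical, and percent-encoding the encoded key is a defensive assumption, made because base64 output can contain `+`, `/`, and `=`, which have special meaning in a query string.

```python
import base64
from urllib.parse import parse_qs, quote, urlsplit


def pubsub_sink_uri(project, region, topic, key_json):
    """Build a gcpubsub:// sink URI from raw service-account key bytes."""
    # b64encode emits a single line with no wrapping.
    encoded = base64.b64encode(key_json).decode("ascii")
    return (
        f"gcpubsub://{project}?region={region}&topic_name={topic}"
        f"&AUTH=specified&CREDENTIALS={quote(encoded, safe='')}"
    )


# Placeholder key contents; in practice you would read the bytes of key.json.
fake_key = b'{"type": "service_account", "project_id": "cockroach-project"}'
uri = pubsub_sink_uri("cockroach-project", "us-east1", "movr-users", fake_key)

# Round trip: decoding the query parameter recovers the original key bytes.
recovered = base64.b64decode(parse_qs(urlsplit(uri).query)["CREDENTIALS"][0])
print(recovered == fake_key)
```

If you use the `base64` command-line tool instead, check whether your platform's version wraps long output across multiple lines, since the value passed in the URI needs to be a single unbroken string.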

Create a changefeed connected to a cloud storage sink

CREATE CHANGEFEED is an Enterprise-only feature. For the Core version, see the CHANGEFEED FOR example.

In this example, you'll set up a changefeed for a single-node cluster that is connected to an AWS S3 sink. The changefeed watches two tables. Note that you can set up changefeeds for any of the supported cloud storage providers (Amazon S3, Google Cloud Storage, Azure Storage).

If you do not already have one, request a trial Enterprise license.

Use the `cockroach start-single-node` command to start a single-node cluster:

    $ cockroach start-single-node --insecure --listen-addr=localhost --background

As the `root` user, open the built-in SQL client:

    $ cockroach sql --insecure

Set your organization name and the Enterprise license key that you received via email:

    > SET CLUSTER SETTING cluster.organization = '<organization name>';
    > SET CLUSTER SETTING enterprise.license = '<secret>';

Enable the `kv.rangefeed.enabled` cluster setting:

    > SET CLUSTER SETTING kv.rangefeed.enabled = true;

Create a database called `cdc_demo`:

    > CREATE DATABASE cdc_demo;

Set the database as the default:

    > SET DATABASE = cdc_demo;

Create a table and add data:

    > CREATE TABLE office_dogs (
        id INT PRIMARY KEY,
        name STRING);
    > INSERT INTO office_dogs VALUES (1, 'Petee'), (2, 'Carl');
    > UPDATE office_dogs SET name = 'Petee H' WHERE id = 1;

Create another table and add data:

    > CREATE TABLE employees (
        dog_id INT REFERENCES office_dogs (id),
        employee_name STRING);
    > INSERT INTO employees VALUES (1, 'Lauren'), (2, 'Spencer');

Start the changefeed:

    > CREATE CHANGEFEED FOR TABLE office_dogs, employees INTO 's3://example-bucket-name/test?AWS_ACCESS_KEY_ID=enter_key_here&AWS_SECRET_ACCESS_KEY=enter_key_here' WITH updated, resolved='10s';

            job_id
    +--------------------+
      360645287206223873
    (1 row)

This will start up the changefeed in the background and return the `job_id`. The changefeed writes to AWS.

Monitor your changefeed on the DB Console. For more information, see Changefeeds Dashboard.

When you are done, exit the SQL shell (`\q`).

To stop `cockroach`, run:

    $ cockroach quit --insecure
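Secret keys can contain characters such as `/` or `+` that have special meaning in a URI. As a minimal sketch, assuming you build the S3 sink URI programmatically, the following Python snippet percent-encodes each credential before placing it in the query string. The helper name `s3_sink_uri` and the sample credential values are hypothetical.

```python
from urllib.parse import quote


def s3_sink_uri(bucket, prefix, access_key_id, secret_access_key):
    """Build an s3:// sink URI with percent-encoded credentials."""
    return (
        f"s3://{bucket}/{prefix}"
        f"?AWS_ACCESS_KEY_ID={quote(access_key_id, safe='')}"
        f"&AWS_SECRET_ACCESS_KEY={quote(secret_access_key, safe='')}"
    )


# Made-up credentials chosen to include characters that need encoding.
s3_uri = s3_sink_uri("example-bucket-name", "test", "AKIAEXAMPLE", "abc/def+ghi")
print(s3_uri)
```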

Create a changefeed connected to a webhook sink

CREATE CHANGEFEED is an Enterprise-only feature. For the Core version, see the CHANGEFEED FOR example.

The webhook sink is currently in beta. See usage considerations, available parameters, and options for more information.

In this example, you'll set up a changefeed for a single-node cluster that is connected to a local HTTP server via a webhook. For this example, you'll use an example HTTP server to test out the webhook sink.

If you do not already have one, request a trial Enterprise license.

Use the `cockroach start-single-node` command to start a single-node cluster:

    $ cockroach start-single-node --insecure --listen-addr=localhost --background

In this example, you'll run CockroachDB's Movr application workload to set up some data for your changefeed.

First create the schema for the workload:

    cockroach workload init movr "postgresql://root@127.0.0.1:26257?sslmode=disable"

Then run the workload:

    cockroach workload run movr --duration=1m "postgresql://root@127.0.0.1:26257?sslmode=disable"

As the `root` user, open the built-in SQL client:

    $ cockroach sql --insecure

Set your organization name and the Enterprise license key that you received via email:

    > SET CLUSTER SETTING cluster.organization = '<organization name>';
    > SET CLUSTER SETTING enterprise.license = '<secret>';

Enable the `kv.rangefeed.enabled` cluster setting:

    > SET CLUSTER SETTING kv.rangefeed.enabled = true;

In a separate terminal window, set up your HTTP server. Clone the test repository:

    git clone https://github.com/cockroachlabs/cdc-webhook-sink-test-server.git
    cd cdc-webhook-sink-test-server/go-https-server

Next, make the script executable and then run the server (passing a specific port if preferred; otherwise it will default to `:3000`):

    chmod +x ./server.sh
    ./server.sh <port>

Back in your SQL shell, run the following statement to create a changefeed that emits to your webhook sink:

    CREATE CHANGEFEED FOR TABLE movr.vehicles INTO 'webhook-https://localhost:3000?insecure_tls_skip_verify=true' WITH updated;

    job_id
    ----------------------
    687842491801632769
    (1 row)

You set up a changefeed on the `vehicles` table, which emits changefeed messages to the local HTTP server.

See the options table for more information on the options available for creating your changefeed to a webhook sink.

In the terminal where your HTTP server is running, you'll receive output similar to:

    2021/08/24 14:00:21 {"payload":[{"after":{"city":"rome","creation_time":"2019-01-02T03:04:05","current_location":"39141 Travis Curve Suite 87","ext":{"brand":"Schwinn","color":"red"},"id":"d7b18299-c0c4-4304-9ef7-05ae46fd5ee1","owner_id":"5d0c85b5-8866-47cf-a6bc-d032f198e48f","status":"in_use","type":"bike"},"key":["rome","d7b18299-c0c4-4304-9ef7-05ae46fd5ee1"],"topic":"vehicles","updated":"1629813621680097993.0000000000"}],"length":1}
    2021/08/24 14:00:22 {"payload":[{"after":{"city":"san francisco","creation_time":"2019-01-02T03:04:05","current_location":"84888 Wallace Wall","ext":{"color":"black"},"id":"020cf7f4-6324-48a0-9f74-6c9010fb1ab4","owner_id":"b74ea421-fcaf-4d80-9dcc-d222d49bdc17","status":"available","type":"scooter"},"key":["san francisco","020cf7f4-6324-48a0-9f74-6c9010fb1ab4"],"topic":"vehicles","updated":"1629813621680097993.0000000000"}],"length":1}
    2021/08/24 14:00:22 {"payload":[{"after":{"city":"san francisco","creation_time":"2019-01-02T03:04:05","current_location":"3893 Dunn Fall Apt. 11","ext":{"color":"black"},"id":"21b2ec54-81ad-4af7-a76d-6087b9c7f0f8","owner_id":"8924c3af-ea6e-4e7e-b2c8-2e318f973393","status":"lost","type":"scooter"},"key":["san francisco","21b2ec54-81ad-4af7-a76d-6087b9c7f0f8"],"topic":"vehicles","updated":"1629813621680097993.0000000000"}],"length":1}

For more detail on emitted changefeed messages, see responses.
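Each webhook request body shown above is a JSON envelope with a `payload` array and a `length` count, and with the `updated` option each message carries a timestamp string. As a hedged sketch of how a receiving service might unpack these bodies, the Python below iterates over the payload and converts the timestamp to a UTC datetime. The function names are hypothetical, and reading the digits before the `.` in `updated` as nanoseconds since the Unix epoch is an assumption based on the sample output.

```python
import json
from datetime import datetime, timezone


def updated_to_datetime(updated):
    """Convert an `updated` value such as '1629813621680097993.0000000000' to UTC."""
    wall_ns = int(updated.split(".")[0])
    # Integer division avoids float rounding; sub-second precision is dropped.
    return datetime.fromtimestamp(wall_ns // 1_000_000_000, tz=timezone.utc)


def iter_events(body):
    """Yield (topic, key, after, updated_at) for each message in a webhook body."""
    envelope = json.loads(body)
    payload = envelope["payload"]
    if envelope.get("length") != len(payload):
        raise ValueError("envelope length does not match payload size")
    for msg in payload:
        ts = updated_to_datetime(msg["updated"]) if "updated" in msg else None
        yield msg["topic"], tuple(msg["key"]), msg["after"], ts


# A trimmed version of the first message in the sample output.
body = (
    '{"payload":[{"after":{"city":"rome","status":"in_use","type":"bike"},'
    '"key":["rome","d7b18299-c0c4-4304-9ef7-05ae46fd5ee1"],"topic":"vehicles",'
    '"updated":"1629813621680097993.0000000000"}],"length":1}'
)
events = list(iter_events(body))
print(events[0][0], events[0][3].isoformat())
```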

Create a changefeed on a table with column families

CREATE CHANGEFEED is an Enterprise-only feature. For the Core version, see the CHANGEFEED FOR example.

New in v22.1: In this example, you'll set up changefeeds on two tables that have column families. You'll use a single-node cluster sending changes to a webhook sink for this example, but you can use any changefeed sink to work with tables that include column families.

For more detail on a changefeed's output when targeting tables with column families, see Changefeeds on tables with column families.

If you do not already have one, request a trial Enterprise license.

Use the `cockroach start-single-node` command to start a single-node cluster:

    cockroach start-single-node --insecure --listen-addr=localhost --background

As the `root` user, open the built-in SQL client:

    cockroach sql --insecure

Set your organization name and the Enterprise license key that you received via email:

    SET CLUSTER SETTING cluster.organization = '<organization name>';
    SET CLUSTER SETTING enterprise.license = '<secret>';

Enable the `kv.rangefeed.enabled` cluster setting:

    SET CLUSTER SETTING kv.rangefeed.enabled = true;

In a separate terminal window, set up your HTTP server. Clone the test repository:

    git clone https://github.com/cockroachlabs/cdc-webhook-sink-test-server.git
    cd cdc-webhook-sink-test-server/go-https-server

Next, make the script executable and then run the server (passing a specific port if preferred; otherwise it will default to `:3000`):

    chmod +x ./server.sh
    ./server.sh <port>

Back in your SQL shell, create a database called `cdc_demo`:

    CREATE DATABASE cdc_demo;

Set the database as the default:

    USE cdc_demo;

Create a table with two column families:

    CREATE TABLE office_dogs (
        id INT PRIMARY KEY,
        name STRING,
        dog_owner STRING,
        FAMILY dogs (id, name),
        FAMILY employee (dog_owner)
    );

Insert some data into the table:

    INSERT INTO office_dogs (id, name, dog_owner) VALUES (1, 'Petee', 'Lauren'), (2, 'Max', 'Taylor'), (3, 'Patch', 'Sammy'), (4, 'Roach', 'Ashley');

Create a second table that also defines column families:

    CREATE TABLE office_plants (
        id INT PRIMARY KEY,
        plant_name STRING,
        office_floor INT,
        safe_for_dogs BOOL,
        FAMILY dog_friendly (office_floor, safe_for_dogs),
        FAMILY plant (id, plant_name)
    );

Insert some data into `office_plants`:

    INSERT INTO office_plants (id, plant_name, office_floor, safe_for_dogs) VALUES (1, 'Sansevieria', 11, false), (2, 'Monstera', 11, false), (3, 'Peperomia', 10, true), (4, 'Jade', 9, true);

Create a changefeed on the `office_dogs` table targeting one of the column families. Use the `FAMILY` keyword in the `CREATE` statement:

    CREATE CHANGEFEED FOR TABLE office_dogs FAMILY employee INTO 'webhook-https://localhost:3000?insecure_tls_skip_verify=true';

You'll receive one message for each of the inserts that affects the specified column family:

    {"payload":[{"after":{"dog_owner":"Lauren"},"key":[1],"topic":"office_dogs.employee"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Sammy"},"key":[3],"topic":"office_dogs.employee"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Taylor"},"key":[2],"topic":"office_dogs.employee"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Ashley"},"key":[4],"topic":"office_dogs.employee"}],"length":1}

Note: The ordering of messages is not guaranteed. That is, you may not always receive messages for the same row, or even the same change to the same row, next to each other.

Alternatively, create a changefeed using the `FAMILY` keyword across two tables:

    CREATE CHANGEFEED FOR TABLE office_dogs FAMILY employee, TABLE office_plants FAMILY dog_friendly INTO 'webhook-https://localhost:3000?insecure_tls_skip_verify=true';

You'll receive one message for each insert that affects the specified column families:

    {"payload":[{"after":{"dog_owner":"Lauren"},"key":[1],"topic":"office_dogs.employee"}],"length":1}
    {"payload":[{"after":{"office_floor":11,"safe_for_dogs":false},"key":[1],"topic":"office_plants.dog_friendly"}],"length":1}
    {"payload":[{"after":{"office_floor":9,"safe_for_dogs":true},"key":[4],"topic":"office_plants.dog_friendly"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Taylor"},"key":[2],"topic":"office_dogs.employee"}],"length":1}
    {"payload":[{"after":{"office_floor":11,"safe_for_dogs":false},"key":[2],"topic":"office_plants.dog_friendly"}],"length":1}
    {"payload":[{"after":{"office_floor":10,"safe_for_dogs":true},"key":[3],"topic":"office_plants.dog_friendly"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Ashley"},"key":[4],"topic":"office_dogs.employee"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Sammy"},"key":[3],"topic":"office_dogs.employee"}],"length":1}

This allows you to define particular column families for the changefeed to target, without necessarily specifying every family in a table.

Note: To create a changefeed specifying two families on one table, ensure that you define the table and family in both instances:

    CREATE CHANGEFEED FOR TABLE office_dogs FAMILY employee, TABLE office_dogs FAMILY dogs INTO {sink};

To create a changefeed that emits messages for all column families in a table, use the `split_column_families` option:

    CREATE CHANGEFEED FOR TABLE office_dogs INTO 'webhook-https://localhost:3000?insecure_tls_skip_verify=true' WITH split_column_families;

You'll receive output for both of the column families in the `office_dogs` table:

    {"payload":[{"after":{"id":1,"name":"Petee"},"key":[1],"topic":"office_dogs.dogs"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Lauren"},"key":[1],"topic":"office_dogs.employee"}],"length":1}
    {"payload":[{"after":{"id":2,"name":"Max"},"key":[2],"topic":"office_dogs.dogs"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Taylor"},"key":[2],"topic":"office_dogs.employee"}],"length":1}
    {"payload":[{"after":{"id":3,"name":"Patch"},"key":[3],"topic":"office_dogs.dogs"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Sammy"},"key":[3],"topic":"office_dogs.employee"}],"length":1}
    {"payload":[{"after":{"id":4,"name":"Roach"},"key":[4],"topic":"office_dogs.dogs"}],"length":1}
    {"payload":[{"after":{"dog_owner":"Ashley"},"key":[4],"topic":"office_dogs.employee"}],"length":1}

Update one of the values in the table:

    UPDATE office_dogs SET name = 'Izzy' WHERE id = 4;

This only affects one column family, which means you'll receive one message:

    {"payload":[{"after":{"id":4,"name":"Izzy"},"key":[4],"topic":"office_dogs.dogs"}],"length":1}
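Because each column family produces its own message, a consumer that wants whole rows has to stitch the pieces back together. The following Python sketch shows one possible approach: it groups messages by table name (the part of `topic` before the first `.`) and primary key, and merges the `after` fields. The function name `merge_families` is hypothetical, and skipping messages whose `after` is empty is a simplifying assumption rather than a statement about how deletes are represented.

```python
import json
from collections import defaultdict


def merge_families(bodies):
    """Merge per-family webhook messages into {(table, key): combined_row}."""
    rows = defaultdict(dict)
    for body in bodies:
        for msg in json.loads(body)["payload"]:
            after = msg.get("after")
            if after is None:
                continue  # Simplification: messages with no row data are ignored.
            table = msg["topic"].partition(".")[0]
            rows[(table, tuple(msg["key"]))].update(after)
    return dict(rows)


# Messages for row 4, copied from the output in this example, in arrival order.
bodies = [
    '{"payload":[{"after":{"id":4,"name":"Roach"},"key":[4],"topic":"office_dogs.dogs"}],"length":1}',
    '{"payload":[{"after":{"dog_owner":"Ashley"},"key":[4],"topic":"office_dogs.employee"}],"length":1}',
    '{"payload":[{"after":{"id":4,"name":"Izzy"},"key":[4],"topic":"office_dogs.dogs"}],"length":1}',
]
merged = merge_families(bodies)
print(merged[("office_dogs", (4,))])
```

This sketch applies messages in arrival order. Since message ordering is not guaranteed, a more careful consumer would likely add the `updated` option and compare timestamps per family before overwriting fields.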

Core changefeeds stream row-level changes to a client until the underlying SQL connection is closed.

Create a Core changefeed

In this example, you'll set up a core changefeed for a single-node cluster.

In a terminal window, start `cockroach`:

    $ cockroach start-single-node \
    --insecure \
    --listen-addr=localhost \
    --background

As the `root` user, open the built-in SQL client:

    $ cockroach sql \
    --url="postgresql://root@127.0.0.1:26257?sslmode=disable" \
    --format=csv

Note: Because core changefeeds return results differently than other SQL statements, they require a dedicated database connection with specific settings around result buffering. In normal operation, CockroachDB improves performance by buffering results server-side before returning them to a client; however, result buffering is automatically turned off for core changefeeds. Core changefeeds also have different cancellation behavior than other queries: they can only be canceled by closing the underlying connection or issuing a `CANCEL QUERY` statement on a separate connection. Combined, these attributes of changefeeds mean that applications should explicitly create dedicated connections to consume changefeed data, instead of using a connection pool as most client drivers do by default.

Note: To determine how wide the columns need to be, the default `table` display format in `cockroach sql` buffers the results it receives from the server before printing them to the console. When consuming core changefeed data using `cockroach sql`, it's important to use a display format like `csv` that does not buffer its results. To set the display format, use the `--format=csv` flag when starting the built-in SQL client, or set the `\set display_format=csv` option once the SQL client is open.

Enable the `kv.rangefeed.enabled` cluster setting:

    > SET CLUSTER SETTING kv.rangefeed.enabled = true;

Create table `foo`:

    > CREATE TABLE foo (a INT PRIMARY KEY);

Insert a row into the table:

    > INSERT INTO foo VALUES (0);

Start the core changefeed:

    > EXPERIMENTAL CHANGEFEED FOR foo;

    table,key,value
    foo,[0],"{""after"": {""a"": 0}}"

In a new terminal, add another row:

    $ cockroach sql --insecure -e "INSERT INTO foo VALUES (1)"

Back in the terminal where the core changefeed is streaming, the following output has appeared:

    foo,[1],"{""after"": {""a"": 1}}"

Note that records may take a couple of seconds to display in the core changefeed.

To stop streaming the changefeed, enter CTRL+C into the terminal where the changefeed is running.

To stop `cockroach`, run:

    $ cockroach quit --insecure
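The CSV output of the core changefeed above has three columns (`table`, `key`, `value`), with the JSON in `key` and `value` quoted using doubled double quotes. As a small illustration of how a script might read captured output, the Python sketch below parses it with the standard `csv` and `json` modules. The function name `parse_core_changefeed` is hypothetical, and the sketch assumes the output was saved as text rather than streamed from a live connection.

```python
import csv
import io
import json


def parse_core_changefeed(text):
    """Yield (table, key, value) tuples from captured CSV changefeed output."""
    for row in csv.reader(io.StringIO(text)):
        if not row or row == ["table", "key", "value"]:
            continue  # Skip blank lines and the header row.
        table, key, value = row
        yield table, json.loads(key), json.loads(value)


# Output copied from this example.
captured = '''table,key,value
foo,[0],"{""after"": {""a"": 0}}"
foo,[1],"{""after"": {""a"": 1}}"
'''
parsed = list(parse_core_changefeed(captured))
print(parsed)
```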

Create a Core changefeed using Avro

In this example, you'll set up a core changefeed for a single-node cluster that emits Avro records. CockroachDB's Avro binary encoding convention uses the Confluent Schema Registry to store Avro schemas.

Use the cockroach start-single-node command to start a single-node cluster:

$ cockroach start-single-node \
  --insecure \
  --listen-addr=localhost \
  --background

Download and extract the Confluent Open Source platform.

Move into the extracted confluent-<version> directory and start Confluent:

$ ./bin/confluent local services start

Only zookeeper, kafka, and schema-registry are needed. To troubleshoot Confluent, see their docs and the Quick Start Guide.

As the root user, open the built-in SQL client:

$ cockroach sql --url="postgresql://root@127.0.0.1:26257?sslmode=disable" --format=csv

Note: Because core changefeeds return results differently than other SQL statements, they require a dedicated database connection with specific settings around result buffering. In normal operation, CockroachDB improves performance by buffering results server-side before returning them to a client; however, result buffering is automatically turned off for core changefeeds. Core changefeeds also have different cancellation behavior than other queries: they can only be canceled by closing the underlying connection or issuing a CANCEL QUERY statement on a separate connection. Combined, these attributes of changefeeds mean that applications should explicitly create dedicated connections to consume changefeed data, instead of using a connection pool as most client drivers do by default.

Note: To determine how wide the columns need to be, the default table display format in cockroach sql buffers the results it receives from the server before printing them to the console. When consuming core changefeed data using cockroach sql, it's important to use a display format like csv that does not buffer its results. To set the display format, use the --format=csv flag when starting the built-in SQL client, or set the \set display_format=csv option once the SQL client is open.

Enable the kv.rangefeed.enabled cluster setting:

> SET CLUSTER SETTING kv.rangefeed.enabled = true;

Create table bar:

> CREATE TABLE bar (a INT PRIMARY KEY);

Insert a row into the table:

> INSERT INTO bar VALUES (0);

Start the core changefeed:

> EXPERIMENTAL CHANGEFEED FOR bar WITH format = avro, confluent_schema_registry = 'http://localhost:8081';

table,key,value
bar,\000\000\000\000\001\002\000,\000\000\000\000\002\002\002\000

In a new terminal, add another row:

$ cockroach sql --insecure -e "INSERT INTO bar VALUES (1)"

Back in the terminal where the core changefeed is streaming, the output will appear:

bar,\000\000\000\000\001\002\002,\000\000\000\000\002\002\002\002

Note that records may take a couple of seconds to display in the core changefeed.

To stop streaming the changefeed, enter CTRL+C into the terminal where the changefeed is running.

To stop cockroach, run:

$ cockroach quit --insecure

To stop Confluent, move into the extracted confluent-<version> directory and stop Confluent:

$ ./bin/confluent local services stop

To terminate all Confluent processes, use:

$ ./bin/confluent local destroy
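The binary output above becomes easier to read once it is split into its parts. The following Python sketch assumes the records use the Confluent Schema Registry framing (one zero "magic" byte, a 4-byte big-endian schema ID, then the Avro payload) and that the payload in this small example consists only of Avro zigzag varints. The function names are hypothetical, and the reading of the decoded numbers as union branch indexes followed by the column value is an assumption about the registered schema, not something this page states.

```python
import struct


def split_confluent_frame(message: bytes):
    """Split a Confluent-framed message into (schema_id, avro_payload)."""
    if len(message) < 5 or message[0] != 0:
        raise ValueError("not a Confluent-framed Avro message")
    # Bytes 1-4 hold the schema ID as a big-endian unsigned integer.
    (schema_id,) = struct.unpack(">I", message[1:5])
    return schema_id, message[5:]


def read_zigzag_longs(payload: bytes):
    """Decode a payload made up only of Avro zigzag varint int/long values."""
    values, current, shift = [], 0, 0
    for byte in payload:
        current |= (byte & 0x7F) << shift
        if byte & 0x80:
            shift += 7  # continuation bit set: more bytes belong to this value
        else:
            values.append((current >> 1) ^ -(current & 1))  # undo zigzag
            current, shift = 0, 0
    if shift:
        raise ValueError("truncated varint")
    return values


# Key and value bytes copied from the second row of the example output.
key = b"\000\000\000\000\001\002\002"
value = b"\000\000\000\000\002\002\002\002"

for name, message in (("key", key), ("value", value)):
    schema_id, payload = split_confluent_frame(message)
    print(name, "schema", schema_id, "numbers", read_zigzag_longs(payload))
```

Under these assumptions, the key and value refer to two different schema IDs, and the final number in each payload matches the inserted value of column a. A real consumer would fetch the schema from the registry and use an Avro library rather than decoding by hand.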

For further information on Core changefeeds, see EXPERIMENTAL CHANGEFEED FOR.

Create a Core changefeed on a table with column families

New in v22.1: In this example, you'll set up Core changefeeds on two tables that have column families. You'll use a single-node cluster with the Core changefeed sending changes to the client.

For more detail on a changefeed's output when targeting tables with column families, see Changefeeds on tables with column families.

Use the cockroach start-single-node command to start a single-node cluster:

cockroach start-single-node --insecure --listen-addr=localhost --background

As the root user, open the built-in SQL client:

cockroach sql --url="postgresql://root@127.0.0.1:26257?sslmode=disable" --format=csv

Enable the kv.rangefeed.enabled cluster setting:

SET CLUSTER SETTING kv.rangefeed.enabled = true;

Create a database called cdc_demo:

CREATE DATABASE cdc_demo;

Set the database as the default:

USE cdc_demo;

Create a table with two column families:

CREATE TABLE office_dogs (
  id INT PRIMARY KEY,
  name STRING,
  dog_owner STRING,
  FAMILY dogs (id, name),
  FAMILY employee (dog_owner)
);

Insert some data into the table:

INSERT INTO office_dogs (id, name, dog_owner) VALUES
  (1, 'Petee', 'Lauren'),
  (2, 'Max', 'Taylor'),
  (3, 'Patch', 'Sammy'),
  (4, 'Roach', 'Ashley');

Create another table that also defines two column families:

CREATE TABLE office_plants (
  id INT PRIMARY KEY,
  plant_name STRING,
  office_floor INT,
  safe_for_dogs BOOL,
  FAMILY dog_friendly (office_floor, safe_for_dogs),
  FAMILY plant (id, plant_name)
);

Insert some data into office_plants:

INSERT INTO office_plants (id, plant_name, office_floor, safe_for_dogs) VALUES
  (1, 'Sansevieria', 11, false),
  (2, 'Monstera', 11, false),
  (3, 'Peperomia', 10, true),
  (4, 'Jade', 9, true);

Create a changefeed on the office_dogs table targeting one of the column families. Use the FAMILY keyword in the statement:

EXPERIMENTAL CHANGEFEED FOR TABLE office_dogs FAMILY employee;

You'll receive one message for each of the inserts that affects the specified column family:

table,key,value
office_dogs.employee,[1],"{""after"": {""dog_owner"": ""Lauren""}}"
office_dogs.employee,[2],"{""after"": {""dog_owner"": ""Taylor""}}"
office_dogs.employee,[3],"{""after"": {""dog_owner"": ""Sammy""}}"
office_dogs.employee,[4],"{""after"": {""dog_owner"": ""Ashley""}}"

Note: The ordering of messages is not guaranteed. That is, you may not always receive messages for the same row, or even the same change to the same row, next to each other.

Alternatively, create a changefeed using the FAMILY keyword across two tables:

EXPERIMENTAL CHANGEFEED FOR TABLE office_dogs FAMILY employee, TABLE office_plants FAMILY dog_friendly;

You'll receive one message for each insert that affects the specified column families:

table,key,value
office_plants.dog_friendly,[1],"{""after"": {""office_floor"": 11, ""safe_for_dogs"": false}}"
office_plants.dog_friendly,[2],"{""after"": {""office_floor"": 11, ""safe_for_dogs"": false}}"
office_plants.dog_friendly,[3],"{""after"": {""office_floor"": 10, ""safe_for_dogs"": true}}"
office_plants.dog_friendly,[4],"{""after"": {""office_floor"": 9, ""safe_for_dogs"": true}}"
office_dogs.employee,[1],"{""after"": {""dog_owner"": ""Lauren""}}"
office_dogs.employee,[2],"{""after"": {""dog_owner"": ""Taylor""}}"
office_dogs.employee,[3],"{""after"": {""dog_owner"": ""Sammy""}}"
office_dogs.employee,[4],"{""after"": {""dog_owner"": ""Ashley""}}"

This allows you to define particular column families for the changefeed to target, without necessarily specifying every family in a table.

Note: To create a changefeed specifying two families on one table, ensure that you define the table and family in both instances:

EXPERIMENTAL CHANGEFEED FOR TABLE office_dogs FAMILY employee, TABLE office_dogs FAMILY dogs;

To create a changefeed that emits messages for all column families in a table, use the split_column_families option:

EXPERIMENTAL CHANGEFEED FOR TABLE office_dogs WITH split_column_families;

In a new terminal window, insert some more values:

cockroach sql --insecure -e "INSERT INTO cdc_demo.office_dogs (id, name, dog_owner) VALUES (5, 'Daisy', 'Cameron'), (6, 'Sage', 'Blair'), (7, 'Bella', 'Ellis');"

Your changefeed will output the following:

table,key,value
office_dogs.dogs,[1],"{""after"": {""id"": 1, ""name"": ""Petee""}}"
office_dogs.employee,[1],"{""after"": {""dog_owner"": ""Lauren""}}"
office_dogs.dogs,[2],"{""after"": {""id"": 2, ""name"": ""Max""}}"
office_dogs.employee,[2],"{""after"": {""dog_owner"": ""Taylor""}}"
office_dogs.dogs,[3],"{""after"": {""id"": 3, ""name"": ""Patch""}}"
office_dogs.employee,[3],"{""after"": {""dog_owner"": ""Sammy""}}"
office_dogs.dogs,[4],"{""after"": {""id"": 4, ""name"": ""Roach""}}"
office_dogs.employee,[4],"{""after"": {""dog_owner"": ""Ashley""}}"
office_dogs.dogs,[5],"{""after"": {""id"": 5, ""name"": ""Daisy""}}"
office_dogs.employee,[5],"{""after"": {""dog_owner"": ""Cameron""}}"
office_dogs.dogs,[6],"{""after"": {""id"": 6, ""name"": ""Sage""}}"
office_dogs.employee,[6],"{""after"": {""dog_owner"": ""Blair""}}"
office_dogs.dogs,[7],"{""after"": {""id"": 7, ""name"": ""Bella""}}"
office_dogs.employee,[7],"{""after"": {""dog_owner"": ""Ellis""}}"

In the same terminal window where you ran the insert, update one of the values in the table:

cockroach sql --insecure -e "UPDATE cdc_demo.office_dogs SET name = 'Izzy' WHERE id = 4;"

This only affects one column family, which means you'll receive one message:

office_dogs.dogs,[4],"{""after"": {""id"": 4, ""name"": ""Izzy""}}"
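Because each column family produces its own message, a consumer that wants whole rows has to recombine them. The following Python sketch shows one possible way to do that for the CSV output in this example. The function name merge_family_messages and the sample text are hypothetical. The sketch applies messages in arrival order and skips messages whose "after" field is null; since message ordering is not guaranteed, a real consumer would likely need to compare timestamps (for example, from the updated changefeed option) before overwriting a column.

```python
import csv
import io
import json


def merge_family_messages(text):
    """Fold per-family messages into one dict per (table, primary key)."""
    rows = {}
    for record in csv.reader(io.StringIO(text)):
        # Skip blank lines and the header row printed by the SQL client.
        if not record or record == ["table", "key", "value"]:
            continue
        topic, key, value = record
        # The first column is "<table>.<family>"; keep only the table name.
        table = topic.partition(".")[0]
        after = json.loads(value).get("after")
        if after is None:
            continue  # this sketch does not handle deletes
        row_id = (table, tuple(json.loads(key)))
        rows.setdefault(row_id, {}).update(after)
    return rows


# Messages for row 4, based on the output shown in this example.
sample = (
    'office_dogs.dogs,[4],"{""after"": {""id"": 4, ""name"": ""Roach""}}"\n'
    'office_dogs.employee,[4],"{""after"": {""dog_owner"": ""Ashley""}}"\n'
    'office_dogs.dogs,[4],"{""after"": {""id"": 4, ""name"": ""Izzy""}}"\n'
)

print(merge_family_messages(sample))
```

Keying the merged rows on the table name and primary key lets messages from several tables share one changefeed without colliding.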